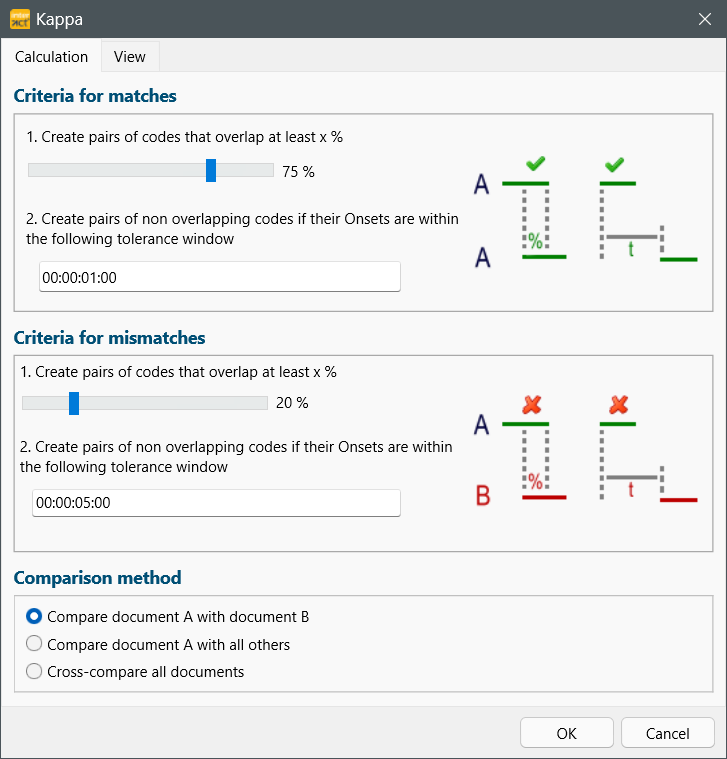

As soon as you confirm the dialog that appears after selecting the command Analysis - Reliability - Kappa, the Kappa calculation parameters dialog appears:

You can run the routine with the default settings to start with, but you will probably need to adjust these parameters to your needs. These settings can influence the resulting Kappa values tremendously!

Note: There is no general 'best' setting, because the right values depend on the type of Codes, the length of your Events, the required accuracy, etc. Adjusting the parameters to fit your data does not turn bad data into good data, but with the right settings you get the best Kappa value possible for your data.

To help you understand the meaning of those settings, read the topic called Kappa Pair Finding Routine.

Criteria for Matches

This setting is the foundation for finding matching pairs:

o Overlap in percentage - For all Codes with a duration, use the first slider to set how much two Codes must overlap to count as a match. If your Codes are all rather short, you need to reduce the overlap percentage to get usable results.

o Tolerance offset - Very short Events might not overlap at all. The time value field lets you define the interval within which the matching Code needs to be coded in order to be counted as a match.

Again, it is important to take your type of data into account. If many things happen within a very short time, you should reduce this value so that your results are not distorted.

During the routine, INTERACT first tries to build pairs based on the first ‘overlapping’ parameter and labels all pairs found. If no more pairs can be built based on the overlap setting, the second setting (the tolerance offset) is applied and all remaining Codes are checked accordingly to try to build additional pairs. A more detailed description can be found in the topic Kappa Pair Finding Routine.

If, after this run, there are still Codes left that have not been labeled a 'Pair', the mismatches (errors) are labeled accordingly. Codes with no counterpart at all appear in the "No pairs" column/line and are displayed in blue in the Kappa Graph.
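The two-pass approach described above can be sketched in Python. This is only a minimal illustration, not INTERACT's actual implementation: the `Event` class, the function names, the default values, and the definition of overlap as a share of the longer Event are all assumptions made for this example. It also only pairs identical Codes; the labeling of mismatches is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Hypothetical stand-in for one coded Event of a document."""
    code: str
    start: float  # onset in seconds
    end: float    # offset in seconds


def overlap_percent(a: Event, b: Event) -> float:
    """Shared time as a percentage of the longer Event (an assumed definition)."""
    shared = min(a.end, b.end) - max(a.start, b.start)
    longer = max(a.end - a.start, b.end - b.start)
    if shared <= 0 or longer <= 0:
        return 0.0
    return 100.0 * shared / longer


def find_pairs(doc1, doc2, min_overlap=80.0, tolerance=1.0):
    """Pair equal Codes: first by overlap, then by onset tolerance."""
    pairs = []
    free2 = list(doc2)  # Events of document 2 that are not paired yet

    def take(a, accepts):
        # Pair `a` with the first free Event of the same Code that is accepted.
        for b in free2:
            if b.code == a.code and accepts(a, b):
                free2.remove(b)
                pairs.append((a, b))
                return True
        return False

    # Pass 1: the overlap criterion.
    rest = [a for a in doc1
            if not take(a, lambda x, y: overlap_percent(x, y) >= min_overlap)]
    # Pass 2: the tolerance offset, applied only to what is still unpaired.
    rest = [a for a in rest
            if not take(a, lambda x, y: abs(x.start - y.start) <= tolerance)]
    return pairs, rest, free2


doc1 = [Event("talk", 0.0, 10.0), Event("nod", 12.0, 12.2), Event("smile", 20.0, 21.0)]
doc2 = [Event("talk", 1.0, 10.0), Event("nod", 12.5, 12.7)]
pairs, unpaired1, unpaired2 = find_pairs(doc1, doc2)
```

In this made-up data, the two "talk" Events overlap by 90 percent and are paired in the first pass. The two "nod" Events do not overlap at all, but their onsets lie 0.5 seconds apart, so the second pass pairs them. The "smile" Event has no counterpart and stays unpaired.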

Criteria for Mismatches

If your Kappa results table shows a lot of No pair entries, basically the same routine is available for labeling mismatches. Adjusting these settings helps to reduce the number of No pair entries, if necessary.

Comparison Method

You can now choose across which documents Kappa is calculated:

o Compare document 1 with document 2 - The classic way of calculating Kappa.

o Compare document 1 with all others - Automatically compares the file selected as Document A in the Kappa Graph with each of the other currently open documents. All Codes and pairs found are accumulated in the results table and used to calculate Kappa.

o Cross-compare all documents - If there is no 'leading' document, you can make INTERACT compare all documents with one another.
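To make the difference between the three methods concrete, the following Python sketch lists which document pairs each method would compare. It is an illustration under assumptions, not INTERACT's own logic: the function name and the method keys are invented here, and the sketch assumes that a cross-comparison visits every unordered pair of documents exactly once.

```python
from itertools import combinations


def comparison_pairs(documents, method):
    """Return the (document, document) pairs a comparison method would visit.

    `documents` is the list of open documents, with the leading document first.
    The method keys are hypothetical names for the three options above.
    """
    if method == "one_vs_two":
        return [(documents[0], documents[1])]
    if method == "one_vs_all":
        return [(documents[0], other) for other in documents[1:]]
    if method == "cross":
        # Assumption: each unordered pair is compared once.
        return list(combinations(documents, 2))
    raise ValueError(f"unknown method: {method}")


docs = ["A", "B", "C"]
one_vs_all = comparison_pairs(docs, "one_vs_all")  # [("A", "B"), ("A", "C")]
cross = comparison_pairs(docs, "cross")            # [("A", "B"), ("A", "C"), ("B", "C")]
```

With three open documents, comparing the first document with all others yields two comparisons, while a cross-comparison yields three.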

Start the Pair-Finding routine

▪ Confirm your settings with OK to start the Pair-finding routine.

When finished, the first thing you see is a visualization of all pairs found: the Kappa-Graph.

▪ Read the topic Kappa-Graph for further details.