If you need to compare the observations of multiple observers (raters), INTERACT offers an inter-rater reliability (IRR) check based on Cohen's Kappa.

The Kappa formula can compare the data of multiple raters when:

▪The data is stored in separate files per observer:

One file for the data of 'Observer A' and a second file with the data of 'Observer B'.

▪The files have the same structure: they contain the same Classes and an identical number of Groups and Sets!

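$$K = \frac{P_{obs} - P_{exp}}{1 - P_{exp}}$$

where $P_{obs}$ is the observed proportion of agreement between the two raters and $P_{exp}$ is the proportion of agreement expected by chance.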
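To make the formula concrete, here is a minimal Python sketch of Cohen's Kappa for two raters. It assumes the pairs of codes have already been formed (INTERACT finds the pairs itself; that step is not modeled here), so each rater's codes arrive as two equally long lists. P_obs is the share of pairs with identical codes; P_exp is the chance agreement computed from each rater's code frequencies. The function name `cohens_kappa` and the sample codes are hypothetical.

```python
from collections import Counter


def cohens_kappa(codes_a, codes_b):
    """Cohen's Kappa for two equally long lists of paired codes (hypothetical helper)."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("need two non-empty lists of equal length")
    n = len(codes_a)
    # P_obs: proportion of pairs on which both raters chose the same code
    p_obs = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # P_exp: chance agreement from each rater's marginal code frequencies
    freq_a = Counter(codes_a)
    freq_b = Counter(codes_b)
    p_exp = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_exp == 1:
        raise ZeroDivisionError("Kappa is undefined when P_exp = 1")
    return (p_obs - p_exp) / (1 - p_exp)


observer_a = ["Talk", "Talk", "Look", "Look", "Talk", "Look"]
observer_b = ["Talk", "Look", "Look", "Look", "Talk", "Talk"]
k = cohens_kappa(observer_a, observer_b)
print(round(k, 3))  # 0.333
```

Here 4 of 6 pairs agree (P_obs = 4/6), both raters use each code 3 times (P_exp = 0.5), so K = (4/6 - 0.5) / 0.5 = 1/3.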

Note: A common misunderstanding is that KAPPA simply compares single codes between two raters. It does not!

Rather, it grades the quality of your data as a whole, and it needs a lot of data (Codes and Events) to reliably estimate the probability on which its value is based.

KAPPA is not suited for a single Code within a Class!
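The following sketch, using hypothetical codes, illustrates why Kappa is unsuitable for a single Code and sensitive to how the codes are distributed. If a Class holds only one Code, the raters always agree by chance, so P_exp = 1 and the formula becomes 0/0. When one code dominates, even 90 % raw agreement can give a Kappa of 0. The helper name `kappa` is an assumption for illustration, and it returns `None` instead of raising when Kappa is undefined.

```python
from collections import Counter


def kappa(codes_a, codes_b):
    """Cohen's Kappa for paired codes; returns None when undefined (P_exp = 1)."""
    n = len(codes_a)
    p_obs = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    # Counter returns 0 for codes a rater never used
    p_exp = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_exp == 1:
        return None
    return (p_obs - p_exp) / (1 - p_exp)


# A Class with a single Code: perfect agreement, but Kappa is undefined (0/0)
single = kappa(["Talk"] * 10, ["Talk"] * 10)
print(single)  # None

# One dominant Code: 9 of 10 pairs agree (90 %), yet Kappa is 0
dominant = kappa(["Talk"] * 9 + ["Look"], ["Talk"] * 10)
print(dominant)  # 0.0
```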

However, our Kappa implementation also offers an overview of the percentage of agreement per document, based on the pairs found, as well as a graphical view of all pairs (correct and wrong ones) for better understanding.
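A percentage per document is simply the share of matching pairs among all pairs found in that document. The sketch below assumes, hypothetically, that the pairs are available as (Observer A code, Observer B code) tuples grouped by document; the names `percent_agreement` and `pairs_per_document` are illustrative and not part of INTERACT.

```python
from typing import Dict, List, Optional, Tuple

Pair = Tuple[str, str]  # (code of Observer A, code of Observer B)


def percent_agreement(pairs: List[Pair]) -> Optional[float]:
    """Percentage of pairs on which both observers chose the same code."""
    if not pairs:
        return None  # no pairs found: the percentage is undefined
    matches = sum(1 for a, b in pairs if a == b)
    return 100.0 * matches / len(pairs)


# Hypothetical pairs per document
pairs_per_document: Dict[str, List[Pair]] = {
    "Session 1": [("Talk", "Talk"), ("Look", "Look"), ("Talk", "Look"), ("Look", "Look")],
    "Session 2": [("Talk", "Talk"), ("Look", "Talk")],
}

for document, pairs in pairs_per_document.items():
    correct = [p for p in pairs if p[0] == p[1]]
    wrong = [p for p in pairs if p[0] != p[1]]
    print(f"{document}: {percent_agreement(pairs):.1f} % "
          f"({len(correct)} correct, {len(wrong)} wrong)")
```

Unlike Kappa, this percentage does not correct for chance agreement, which is why it works as a per-document overview but not as a reliability coefficient.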

By changing the default parameters so that they best suit your data, you can influence the Kappa value.

Note: There is no such thing as an overall Kappa for multiple Classes. Cohen designed the Kappa formula for sequential, exhaustive codings. INTERACT data split over multiple Classes is usually neither sequential nor exhaustive.

Kappa calculation largely depends on probability calculation.

This means that the resulting value is more realistic for larger data pools.

IMPORTANT: To improve the relevance of the resulting KAPPA value, merge multiple observations from the same study.

To create separate compilation files per observer, merge the relevant files for 'Observer A' into one compilation file and the observations of 'Observer B' into a second compilation file.

DO NOT merge files from different coders!

Make sure the order of the Sets (= Sessions) is the same in both files and that the spelling of the codes is identical. The KAPPA routine first compares the data Set by Set, then accumulates the pairs and errors found for the Kappa calculation.
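The Set-by-Set comparison followed by accumulation can be sketched as below. This is an illustrative assumption about the bookkeeping, not INTERACT's actual routine: each Set contributes already-matched (Observer A, Observer B) code pairs, these are summed into one table of pair counts across all Sets, and a single Kappa is computed from the pooled table. The pair lists and the function name `kappa_from_counts` are hypothetical.

```python
from collections import Counter


def kappa_from_counts(counts):
    """Cohen's Kappa from a Counter of (code_a, code_b) pair frequencies."""
    n = sum(counts.values())
    # Observed agreement: pairs where both observers used the same code
    p_obs = sum(k for (a, b), k in counts.items() if a == b) / n
    # Marginal code frequencies per observer
    freq_a, freq_b = Counter(), Counter()
    for (a, b), k in counts.items():
        freq_a[a] += k
        freq_b[b] += k
    p_exp = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    return (p_obs - p_exp) / (1 - p_exp)


# Hypothetical matched pairs per Set (Sets in the same order in both files)
pairs_per_set = [
    [("Talk", "Talk"), ("Look", "Look"), ("Talk", "Look")],  # Set 1
    [("Look", "Look"), ("Talk", "Talk"), ("Look", "Talk")],  # Set 2
]

total = Counter()
for set_pairs in pairs_per_set:
    total += Counter(set_pairs)  # accumulate pairs (matches and errors) Set by Set

kappa = kappa_from_counts(total)
print(round(kappa, 3))  # 0.333
```

Pooling all Sets before computing Kappa gives the probability estimates more data to work with, which is the reason merging observations from the same study improves the result.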

Run Kappa

To compare documents from different raters, containing the same Classes and Codes, do as follows:

▪Make sure that only the files to be compared are open in Mangold INTERACT.

▪Click Analysis - Reliability - Kappa in the toolbar.


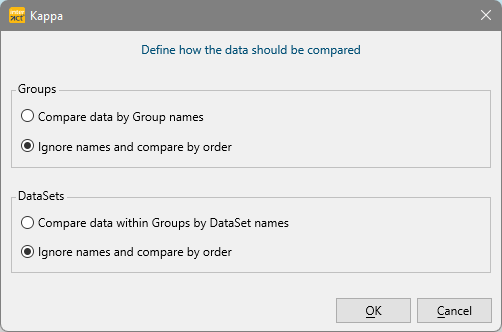

The following dialog appears, allowing you to select the level of comparison:

This selection is very important because the comparison is made per DataSet:

| By Order - If the order of all DataGroups and DataSets is identical in the separate documents, you can ignore the names. In this case, select By Order. |

| By Name - If the order of the DataSets differs between the data files, each pair of corresponding DataGroups and DataSets must have exactly the same name entered in its description field in both documents. |

▪Select the applicable structure and confirm the upcoming dialog with OK.

Next, the Kappa Parameter dialog appears.

| TIP: | Merge the relevant files for 'Observer A' into one compilation file and the observations of 'Observer B' into a second compilation file. KAPPA is largely based on probability, which is why larger data pools result in a more realistic KAPPA value. |

IMPORTANT: For calculating Kappa, DO NOT merge files from different Coders!
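The difference between the By Order and By Name options in the structure dialog can be illustrated with a small sketch. It assumes, hypothetically, that each document is a list of (description, data) entries, one per DataSet, and that descriptions are unique within a document; the function `match_sets` and the sample data are illustrative only and do not reflect INTERACT's file format.

```python
def match_sets(sets_a, sets_b, by_name):
    """Pair the DataSets of two documents for comparison (hypothetical helper).

    by_name=False: pair by position, so the order must be identical in both files.
    by_name=True:  pair by identical description text, regardless of order.
    """
    if not by_name:
        if len(sets_a) != len(sets_b):
            raise ValueError("By Order requires the same number of Sets")
        return list(zip(sets_a, sets_b))
    lookup_b = dict(sets_b)  # description -> data of the second document
    pairs = []
    for name, data_a in sets_a:
        if name not in lookup_b:
            raise KeyError(f"no Set named {name!r} in the second document")
        pairs.append(((name, data_a), (name, lookup_b[name])))
    return pairs


# The second observer's file lists the same Sessions in a different order
doc_a = [("Session 1", "A1"), ("Session 2", "A2")]
doc_b = [("Session 2", "B2"), ("Session 1", "B1")]

for (name_a, data_a), (name_b, data_b) in match_sets(doc_a, doc_b, by_name=False):
    print("By Order:", name_a, "<->", name_b)  # wrong Sessions get compared

for (name_a, data_a), (name_b, data_b) in match_sets(doc_a, doc_b, by_name=True):
    print("By Name: ", name_a, "<->", name_b)  # matching Sessions are compared
```

With the Sessions out of order, matching by position pairs Session 1 with Session 2, whereas matching by description pairs each Session with its counterpart.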