The KAPPA coefficient is always calculated between the observations of two observers (represented by two INTERACT Documents).

The Kappa coefficient calculated by INTERACT accounts for the fact that a coder may have assigned more than one code at a time, whereas the standard Cohen's Kappa does not (see description below).

INTERACT also handles cases where one coder has detected a certain behavior whereas the other one has detected nothing. Most Kappa calculation programs do not take this into account either.

The Cohen's Kappa calculation in INTERACT is based on the following formula:

K = (Pobs - Pexp) / (1 - Pexp)

Pobs is the proportion of agreement actually observed, and Pexp is the proportion of agreement expected by chance. Pobs is computed by summing the tallies that represent agreement (the values on the grey diagonal in the result matrix) and dividing by the total number of tallies. Pexp is computed by summing the chance agreement probabilities for each Class.

A practical example follows. The two Documents used for this example, Kappa list 1.xiact and Kappa list 2.xiact, can be found in the ..\Mangold INTERACT Data\Demo\English directory.

Result matrix

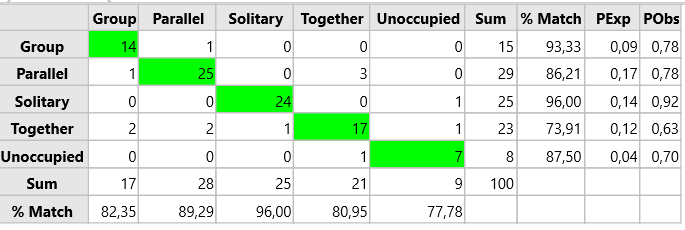

The matrix shows the results of two observers, collected in two separate Documents.

All Codes assigned by both observers in the same time interval are counted together. The values in the grey fields, which form a diagonal from the upper left to the lower right, are referred to as matching codes. These values are calculated using our Kappa Pair Finding Routine.

The "Totals" shown in the last row and last column of the matrix are needed to calculate the agreement expected by chance.


In this example, observer A has coded "group" 17 times, whereas observer B has coded "group" 16 times. However, both observers assigned this code in the same time interval only 15 times.

Among the 17 times observer A coded "group", observer B coded "Parallel" once and "Together" once.

The proportion of agreement actually observed is referred to as Pobs and computed as follows:

(The matching codes are summed and the result is divided by the total number of tallies.)

Pobs = Sum of matching codes / Total number of given codes

Pobs = (14+25+24+17+7)/100 = 87/100 = 0,87000

The proportion expected by chance is referred to as Pexp and computed as follows:

(For every code, the total per column is multiplied by the total per row. These products are summed and finally divided by the square of the total number of tallies.)

Pexp = Sum over all codes of (C-total * R-total) / (Sum Total * Sum Total)

Pexp = (17*15 + 28*29 + 25*25 + 21*23 + 9*8) / (100*100) = 2247/10000 = 0,2247

If we use these figures in our Kappa formula, we get the following result:

K = (0,87 - 0,2247) / (1 - 0,2247) = 0,6453 / 0,7753 = 0,83232
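The calculation above can be reproduced with a few lines of Python. This is only a minimal sketch of the arithmetic, not INTERACT's implementation: the function name `cohen_kappa` and the list names are hypothetical, and the matching codes and totals are typed in by hand from the example instead of being derived by the Kappa Pair Finding Routine.

```python
def cohen_kappa(matches, col_totals, row_totals):
    """Return (Pobs, Pexp, K) from the diagonal and the totals of a result matrix.

    matches    -- tallies on the diagonal, one per code
    col_totals -- total per column, one per code
    row_totals -- total per row, one per code
    """
    total = sum(col_totals)
    if total != sum(row_totals):
        raise ValueError("row totals and column totals must add up to the same value")

    # Proportion of agreement actually observed.
    p_obs = sum(matches) / total
    # Proportion of agreement expected by chance.
    p_exp = sum(c * r for c, r in zip(col_totals, row_totals)) / (total * total)
    kappa = (p_obs - p_exp) / (1 - p_exp)
    return p_obs, p_exp, kappa


# Figures taken from the worked example (hypothetical variable names).
matches = [14, 25, 24, 17, 7]
col_totals = [17, 28, 25, 21, 9]
row_totals = [15, 29, 25, 23, 8]

p_obs, p_exp, kappa = cohen_kappa(matches, col_totals, row_totals)
print(f"Pobs = {p_obs:.5f}, Pexp = {p_exp:.5f}, K = {kappa:.5f}")
```

Run as written, the sketch prints Pobs = 0.87000, Pexp = 0.22470 and K = 0.83232, which matches the figures above (Python uses a decimal point instead of a decimal comma).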

Single Code Values

The P values listed per Code are unrelated to the Kappa value. They are based on what we refer to as 'edge sum probabilities', because the rule used for the overall matrix cannot simply be applied to a single code.

That is why the Pexpected and Pobserved values for the separate codes are calculated differently:

Pobs = obsp/(rsum+csum-obsp)

Pexp = (rsum*csum)/(rsum+csum-(rsum*csum))

csum = Total per column / total number of tallies

rsum = Total per row / total number of tallies

obsp = Number of matches / total number of tallies

Example for 'Group':

Pobs = (14/100) / (15/100 + 17/100 - (14/100)) = 0,14 / (0,15 + 0,17 - 0,14) = 0,14/0,18 = 0,77778

Pexp = (0,15*0,17)/((0,15+0,17) - (0,15*0,17)) = 0,0255 / (0,32 - 0,0255) = 0,0255 / 0,2945 = 0,08659
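The per-code formulas can be checked the same way. The following sketch assumes the figures of the 'Group' example (14 matches, a row total of 15, a column total of 17 and 100 tallies overall); the function name `single_code_values` is hypothetical and not part of INTERACT.

```python
def single_code_values(matches, row_total, col_total, total):
    """Return (Pobs, Pexp) for one code, based on the edge sum probabilities."""
    obsp = matches / total    # number of matches / total number of tallies
    rsum = row_total / total  # total per row / total number of tallies
    csum = col_total / total  # total per column / total number of tallies

    p_obs = obsp / (rsum + csum - obsp)
    p_exp = (rsum * csum) / (rsum + csum - rsum * csum)
    return p_obs, p_exp


# 'Group' example: 14 matches, row total 15, column total 17, 100 tallies.
group_p_obs, group_p_exp = single_code_values(14, 15, 17, 100)
print(f"Pobs = {group_p_obs:.5f}, Pexp = {group_p_exp:.5f}")
```

Rounded to five decimals, this gives 0.77778 and 0.08659, the same values as in the example.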

The sum of all csum values = 1

The sum of all rsum values = 1
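A short check of this property, using the column and row totals from the example. The sketch uses `fractions.Fraction` so that the sums are exact and not subject to floating-point rounding; the variable names are hypothetical.

```python
from fractions import Fraction

# Totals per column and per row, taken from the worked example.
col_totals = [17, 28, 25, 21, 9]
row_totals = [15, 29, 25, 23, 8]
total = 100  # total number of tallies

# csum and rsum for every code, kept as exact fractions.
csum_values = [Fraction(c, total) for c in col_totals]
rsum_values = [Fraction(r, total) for r in row_totals]

csum_sum = sum(csum_values)
rsum_sum = sum(rsum_values)
print(csum_sum, rsum_sum)
```

Both sums are exactly 1 because the column totals and the row totals each add up to the total number of tallies.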
